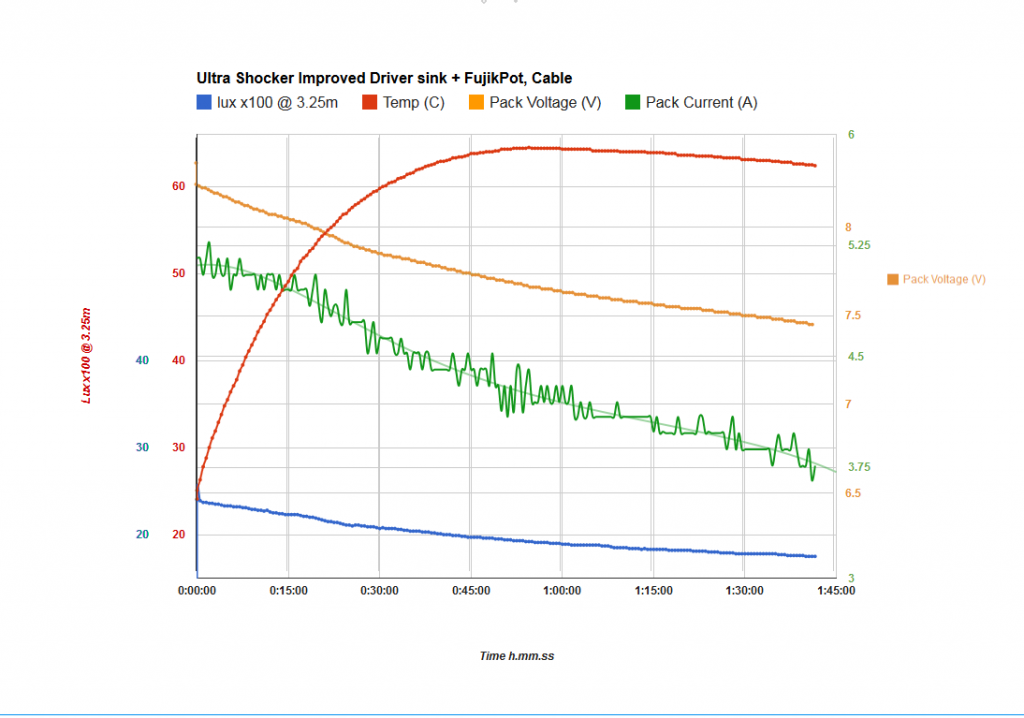

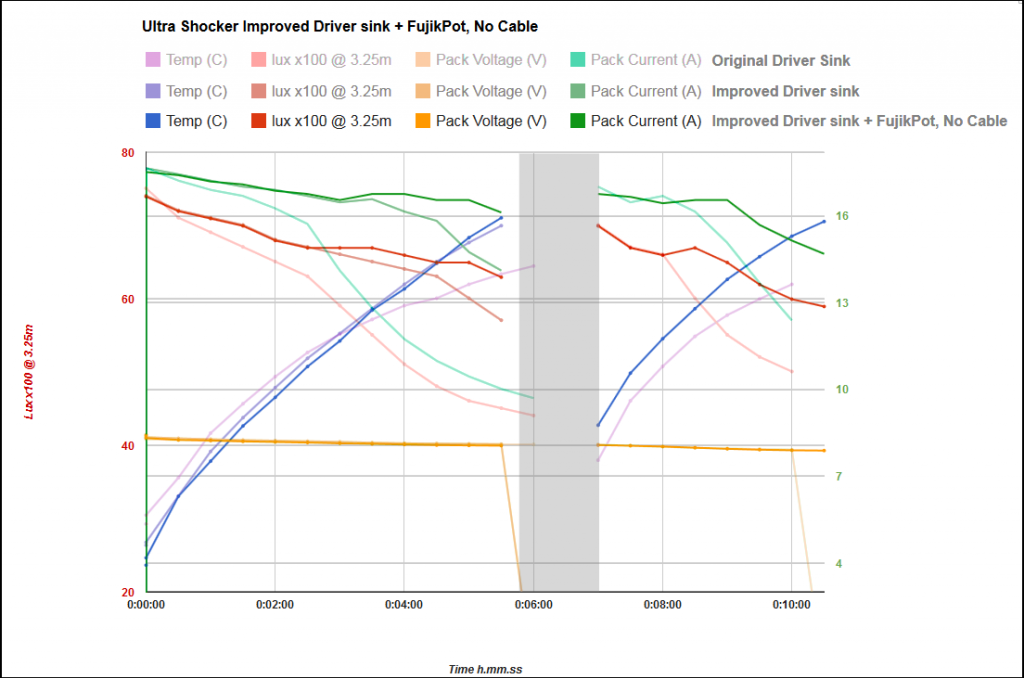

OK, here are the final results after the Fujik potting.

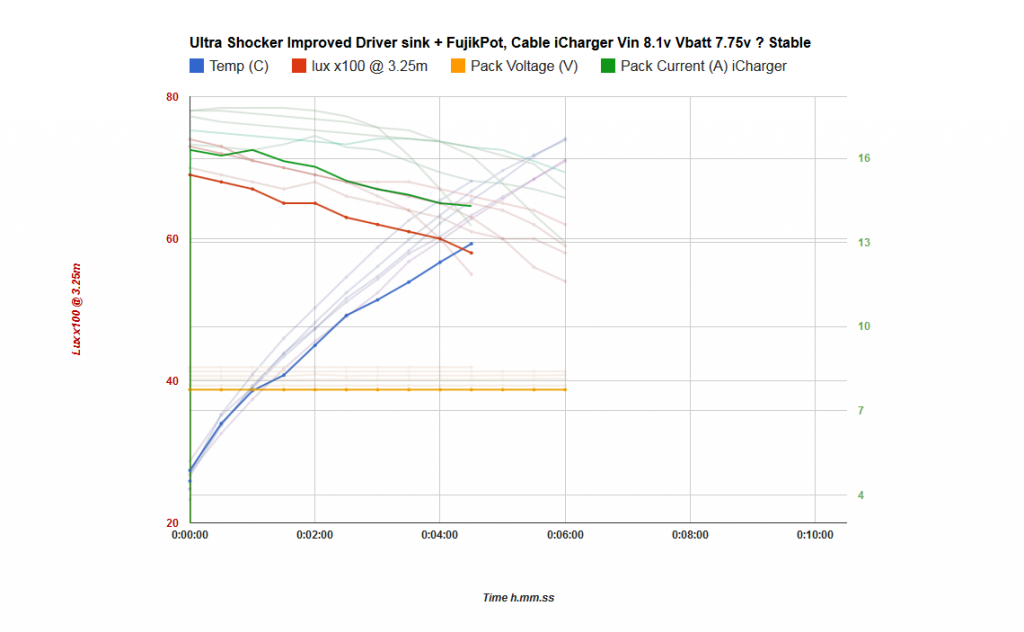

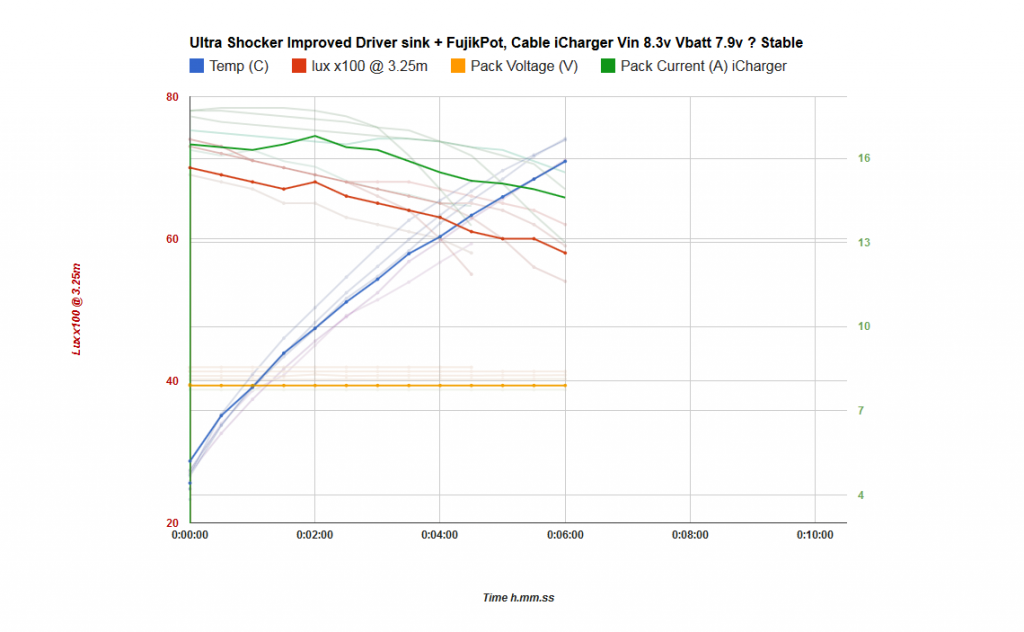

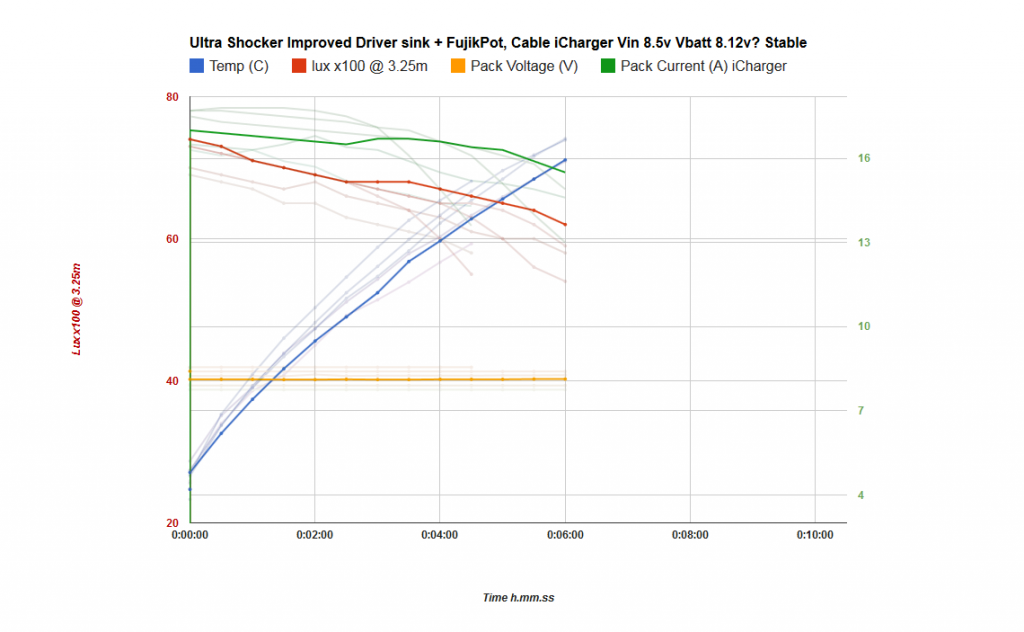

The graph is a bit of a mess because I overlaid the first two no-cable tests to show the direct differences that upgrading the driver heatsinking has made.

I’m glad to see that potting in Fujik has brought another improvement. It’s more subtle this time but still worthwhile, giving a flatter overall current/output plot. It’s particularly obvious after the 3.5 minute mark, where current seems better regulated (why it jumps up suddenly I have no idea, but I’ll take it!) and output is considerably higher than before as a result. The output heat cliff is pushed further back and is now very close to my 70-degree temperature cutoff on the heat sink. When I called it a day at 5.5 minutes I was still seeing 16.31 amps of draw. Nice!

Output heat sag at the emitters is obviously also a factor in all this, but even without considering the drop in current from the start of the test I’m only seeing about a 15% drop in lux over the course of the test. That’s pretty acceptable to me considering the exceptionally high power levels we’re talking about here.
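If anyone wants to run the same sum on their own log, the percentage drop is just the difference between the first and last lux readings divided by the first. A minimal Python sketch follows; the function name and the example readings are placeholders made up for illustration, not the measured values from this test.

```python
def percent_drop(start_lux: float, end_lux: float) -> float:
    """Percentage fall from the starting reading to the final reading."""
    if start_lux <= 0:
        raise ValueError("start_lux must be positive")
    return (start_lux - end_lux) / start_lux * 100.0


# Placeholder readings for illustration only, NOT data from this test.
example_start_lux = 1000.0
example_end_lux = 850.0
print(f"{percent_drop(example_start_lux, example_end_lux):.1f}% drop")
```

The same function works on any pair of readings, so it can also be applied between two intermediate samples to see where in the run most of the sag happens.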

I also tried to repeat the cool-off and retest method of the very first test to see how things compare. I failed to get the light back to the same temperature, but pack voltage and output were very similar. Actually they track amazingly well just after turn-on, and even with a higher overall starting temperature it’s obvious that performance is maintained far better than before.

-

Overall I’m pretty pleased with the output characteristic of the light now. I still need to see how things have changed in the fully assembled light, but this gives me confidence that the performance related to driver heatsinking is now about as good as I can get it. Anything I can do to reduce resistance losses from here will likely result mostly in higher output.

-

Unfortunately the bloody flicker is still present. It seems to start right around the 2.5 minute mark, and looking closely at the offending emitter through the welding glass I see nothing at all dodgy with it. All array tiles are fully lit and very even, and frankly I can’t directly see any flicker at all while looking at the emitter this way. It’s only really obvious when shining at a white wall and covering the other two reflector wells with my hand; it’s the kind of thing you catch in your peripheral vision more than when looking directly at the hotspot.

Well, I was hoping the emitter would be obviously at fault. It might still be, but if so only in a subtle way. Maybe it will get worse and become clearer as the light is run in?

I think it’s most likely that I damaged a 7135 chip in the stacking process, either through bending the legs (not recommended, I know, but it had to be done) or through applying too much heat. It may even heat up, flicker, go offline completely and then flicker back online as the overall temperature gets higher. Possibly a stretch, but it might just explain the jump in drive current I see around the 3.5 minute mark…
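One rough way to sanity-check the dropped-chip idea: an AMC7135 is nominally a 350 mA regulator (380 mA bins also exist), so a chip going offline or coming back should show up as a step of roughly one chip’s worth of current. The sketch below is only that, a sketch. The function name, the 0.1 A tolerance and the example step sizes are my own assumptions, and a chip that is overheating or out of regulation won’t necessarily be passing its full nominal current anyway.

```python
NOMINAL_CHIP_CURRENT_A = 0.35  # common AMC7135 rating; assumed here, 0.38 A bins exist


def chips_for_step(observed_step_a: float,
                   per_chip_a: float = NOMINAL_CHIP_CURRENT_A,
                   tolerance_a: float = 0.1):
    """Return the whole number of chips a current step is close to, or None.

    tolerance_a is an arbitrary allowance for meter and reading error.
    """
    chips = round(abs(observed_step_a) / per_chip_a)
    if chips >= 1 and abs(abs(observed_step_a) - chips * per_chip_a) <= tolerance_a:
        return chips
    return None


# Hypothetical step sizes for illustration, NOT values read off the graph.
for step_a in (0.35, 0.72, 0.05):
    print(step_a, "A ->", chips_for_step(step_a))
```

A step that lands near a whole multiple of the per-chip current would at least be consistent with chips switching in or out; a step that doesn’t fit points back towards something else, such as voltage sag or regulation headroom.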

I dunno, it’s too complicated with all these chips; trying to keep track of all the variables is doing my head in. The 7135s are probably all going through varying degrees of not working 100% right at these temperatures and all I’m seeing is the sum total of their combined agony!

I don’t really want to desolder the emitter wiring to swap over and test, since that part of the light, i.e. the ground-flat insulated solder blobs and the alignment of the emitters, is quite a pain to get right. I think I’ll live with the problem for now.

-

Edit: And here is the test setup. I’ve got this going pretty well at this stage: I can just snap a photo with my other phone every 30 seconds and get all the data bar the lux reading in one easy snapshot. :bigsmile: